1. Introduction

This project implements a deep learning-based facial expression recognition system using the FER-2013 dataset and VGG19 architecture with batch normalization. The system can classify facial expressions into seven distinct emotion categories: Angry, Disgust, Fear, Happy, Neutral, Sad, and Surprise.

The project addresses the growing need for emotion recognition across various domains, including human-computer interaction, mental health monitoring, customer sentiment analysis, and entertainment applications. By combining transfer learning from VGG19 with a custom classifier head, the system reaches 66.33% accuracy on the FER-2013 test set of grayscale facial images.

The implementation demonstrates a practical application of deep learning to emotion detection. It uses data augmentation to improve generalization and reduce overfitting, which is a real risk when fine-tuning a large network on small 48×48 pixel images.

Core Features:

- 7-class emotion classification (Angry, Disgust, Fear, Happy, Neutral, Sad, Surprise)

- Transfer learning with pre-trained VGG19 architecture

- Data augmentation to improve generalization

- Batch normalization for training stability

- Dropout regularization to prevent overfitting

- Comprehensive training visualization and evaluation metrics

- Individual prediction visualization with probability distributions

2. Methodology / Approach

The system employs transfer learning with VGG19, a deep convolutional neural network pre-trained on ImageNet. The architecture is adapted for facial expression recognition by replacing the final classification layers with custom fully-connected layers tailored for 7 emotion classes.

2.1 System Architecture

The facial expression recognition pipeline consists of:

- Data Loading: FER-2013 dataset with 35,887 grayscale images (48×48 pixels)

- Data Augmentation: Random horizontal flips and rotations for training set

- Model Architecture: VGG19 backbone with custom classifier head

- Training: AdamW optimizer with cross-entropy loss

- Evaluation: Accuracy tracking and loss monitoring across epochs

- Prediction: Softmax probabilities for emotion classification
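The final pipeline step turns the model's seven raw outputs (logits) into probabilities, a predicted label and a confidence score. The notebook's `predict` function is not shown here, so the following is only a NumPy sketch. It assumes the class order from Section 5.3 and that "confidence" means the largest softmax probability. The logits in the example are hypothetical, not real model outputs.

```python
import numpy as np

# Class order assumed to match the index mapping listed in Section 5.3.
CLASSES = ["Angry", "Disgust", "Fear", "Happy", "Neutral", "Sad", "Surprise"]

def softmax(logits):
    # Subtract the row-wise max before exponentiating for numerical stability;
    # softmax is shift-invariant, so the probabilities are unchanged.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def predict_labels(logits):
    """Return (label, confidence) pairs; confidence is the top softmax probability."""
    probs = softmax(np.asarray(logits, dtype=np.float64))
    top = probs.argmax(axis=-1)
    return [(CLASSES[i], float(probs[row, i])) for row, i in enumerate(top)]

# Hypothetical logits for two images (not outputs of the trained model).
example = np.array([
    [0.1, -1.0, 0.3, 4.0, 0.5, 0.2, 1.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
])
for label, conf in predict_labels(example):
    print(f"{label}: {conf:.2%}")
```

With all-equal logits, every class gets probability 1/7 (about 14.3%). A confidence well above that, like the 98.41% reported for the Happy example in Section 7.3, means the model strongly prefers one class.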

2.2 Implementation Strategy

The implementation uses PyTorch as the deep learning framework with the timm library for accessing pre-trained models. Data augmentation techniques (horizontal flips, rotations) are applied during training to improve model robustness. The custom classifier includes dropout layers (30% rate) to prevent overfitting, and gradient clipping is employed to stabilize training.
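The document does not say which gradient-clipping variant is used. A common choice in PyTorch is `torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)`, which rescales all gradients together whenever their combined L2 norm exceeds the threshold. The NumPy sketch below illustrates that rule and assumes norm-based clipping with the threshold of 1.0 listed in Section 8.3. `clip_grad_norm` here is a hypothetical helper, not the project's code.

```python
import numpy as np

def clip_grad_norm(grads, max_norm=1.0, eps=1e-6):
    """Scale a list of float gradient arrays in place so their global L2 norm is at most max_norm.

    Follows the rule of torch.nn.utils.clip_grad_norm_: one scale factor is
    shared by every array, so the overall gradient direction is preserved.
    Returns the global norm measured before clipping.
    """
    total_norm = float(np.sqrt(sum(np.sum(g.astype(np.float64) ** 2) for g in grads)))
    clip_coef = max_norm / (total_norm + eps)
    if clip_coef < 1.0:
        for g in grads:
            g *= clip_coef  # in-place rescale, like the PyTorch utility
    return total_norm

grads = [np.array([3.0, 0.0]), np.array([4.0])]  # global norm = sqrt(9 + 16) = 5
norm_before = clip_grad_norm(grads, max_norm=1.0)
norm_after = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
print(norm_before, norm_after)
```

Because the whole gradient is rescaled rather than each component being clamped, a single large update step is shortened but keeps its direction.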

3. Mathematical Framework

3.1 Model Architecture

The VGG19 architecture consists of:

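- Feature Extractor: the standard VGG19 layout of 16 convolutional layers (each followed by batch normalization in `vgg19_bn`) and 3 fully connected layers; the final 1000-class ImageNet layer is replaced by the custom classifier, which receives the 4096-dimensional output of the second fully connected layer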

- Custom Classifier:

$$\text{Input}_{4096} \to \text{Dropout}(0.3) \to \text{Linear}(4096 \to 512) \to \text{ReLU} \to \text{Dropout}(0.3) \to \text{Linear}(512 \to 7)$$
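To make the shapes and the role of dropout concrete, here is a NumPy forward pass of the head described above. It is a sketch and not the notebook's `FaceRecognitionModel`. The random initialisation is illustrative only. Dropout follows the inverted-dropout convention of `torch.nn.Dropout`: it is active only in training, and surviving activations are scaled by 1/(1-p).

```python
import numpy as np

def init_linear(rng, in_dim, out_dim):
    # Uniform(-1/sqrt(in_dim), 1/sqrt(in_dim)) initialisation, for illustration only.
    bound = 1.0 / np.sqrt(in_dim)
    weight = rng.uniform(-bound, bound, size=(in_dim, out_dim))
    bias = rng.uniform(-bound, bound, size=out_dim)
    return weight, bias

def dropout(x, p, rng, training):
    # Inverted dropout: zero each activation with probability p and rescale the
    # survivors by 1/(1-p), so the layer is the identity at inference time.
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p
    return x * keep / (1.0 - p)

def classifier_head(features, params, p=0.3, rng=None, training=False):
    """features: (batch, 4096) backbone output -> (batch, 7) logits."""
    (w1, b1), (w2, b2) = params
    x = dropout(features, p, rng, training)
    x = np.maximum(x @ w1 + b1, 0.0)   # Linear(4096 -> 512) followed by ReLU
    x = dropout(x, p, rng, training)
    return x @ w2 + b2                 # Linear(512 -> 7): raw logits, softmax applied later

rng = np.random.default_rng(0)
params = [init_linear(rng, 4096, 512), init_linear(rng, 512, 7)]
n_params = sum(w.size + b.size for w, b in params)
logits = classifier_head(rng.standard_normal((2, 4096)), params)  # evaluation mode
print(logits.shape, n_params)
```

The head adds 4096·512 + 512 + 512·7 + 7 = 2,101,255 trainable parameters, a small fraction of the roughly 144 million in the full ImageNet VGG19.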

3.2 Loss Function

Cross-entropy loss for multi-class classification, averaged over the mini-batch (as PyTorch's `nn.CrossEntropyLoss` does by default):

$$\mathcal{L} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{ic} \log(p_{ic})$$

where:

- $N$ = batch size

- $C = 7$ emotion classes

- $y_{ic}$ = ground truth (1 if sample $i$ belongs to class $c$, 0 otherwise)

- $p_{ic}$ = predicted (softmax) probability for sample $i$ and class $c$
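The cross-entropy above, averaged over the batch as PyTorch does by default, can be computed stably from raw logits with the log-sum-exp trick. This is equivalent to what `torch.nn.CrossEntropyLoss` computes: log-softmax followed by negative log-likelihood. Below is a NumPy sketch that uses integer labels $y_i$ in place of the one-hot $y_{ic}$. `cross_entropy` is a hypothetical helper name.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean cross-entropy from raw logits (N, C) and integer class labels (N,)."""
    logits = np.asarray(logits, dtype=np.float64)
    labels = np.asarray(labels)
    # log p_ic = z_ic - log(sum_k exp(z_ik)); subtracting the row max keeps exp() from overflowing.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # With one-hot y_ic, the inner sum over c keeps only the true-class term.
    return float(-log_probs[np.arange(len(labels)), labels].mean())

# A model with no information (equal logits for all 7 classes) scores ln(7), about 1.946.
uniform = np.zeros((4, 7))
print(cross_entropy(uniform, [0, 3, 5, 6]))
```

This provides a reference point for Section 7.4: uniform guessing over 7 classes gives a loss of ln 7 ≈ 1.95, so the reported test loss of 0.9448 is well below chance level.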

3.3 Accuracy Calculation

$$\text{Accuracy} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}[\arg\max(p_i) = y_i]$$

where $y_i$ is the index of the true class of sample $i$ and $\mathbb{1}[\cdot]$ is the indicator function, which returns 1 for a correct prediction and 0 otherwise.
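Softmax is monotonic, so the argmax of the probabilities equals the argmax of the logits, and the same function works on either. Here is a minimal NumPy sketch of the formula, using 4 classes for brevity (the model has 7). `accuracy` is a hypothetical helper name.

```python
import numpy as np

def accuracy(probs, labels):
    """Fraction of samples whose highest-scoring class equals the true class index."""
    preds = np.asarray(probs).argmax(axis=1)              # argmax(p_i)
    return float((preds == np.asarray(labels)).mean())    # mean of 1[argmax(p_i) = y_i]

probs = np.array([
    [0.7, 0.1, 0.1, 0.1],  # predicts class 0
    [0.2, 0.5, 0.2, 0.1],  # predicts class 1
    [0.1, 0.1, 0.3, 0.5],  # predicts class 3
])
print(accuracy(probs, [0, 1, 2]))  # two of three predictions are correct
```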

3.4 Data Augmentation

Training transformations:

- Random horizontal flip: probability $p = 0.5$

- Random rotation: range $\theta \in [-20°, +20°]$

- Tensor normalization: $x' = \frac{x}{255}, \quad x \in [0, 255] \Rightarrow x' \in [0, 1]$
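In torchvision, these three steps would typically be `transforms.RandomHorizontalFlip(p=0.5)`, `transforms.RandomRotation(20)` and `transforms.ToTensor()`. The notebook's exact pipeline is not shown, so that mapping is an assumption. The NumPy sketch below re-implements the same operations on a 48×48 grayscale array to show what each one does. Like the `RandomRotation` defaults, it uses nearest-neighbour sampling and fills uncovered corners with 0. The helper names and the random stand-in image are hypothetical.

```python
import numpy as np

def random_hflip(img, rng, p=0.5):
    # Mirror left-right with probability p.
    return img[:, ::-1] if rng.random() < p else img

def rotate_nearest(img, degrees):
    # Rotate about the image centre. Each output pixel looks up its source
    # location under the inverse rotation (nearest-neighbour sampling);
    # pixels whose source falls outside the image are filled with 0.
    h, w = img.shape
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    c, s = np.cos(theta), np.sin(theta)
    src_x = np.rint(c * (xs - cx) + s * (ys - cy) + cx).astype(int)
    src_y = np.rint(-s * (xs - cx) + c * (ys - cy) + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out

def augment(img_uint8, rng, max_degrees=20.0):
    img = random_hflip(img_uint8, rng)
    img = rotate_nearest(img, rng.uniform(-max_degrees, max_degrees))
    # Scale [0, 255] -> [0, 1], as in x' = x / 255.
    return img.astype(np.float32) / 255.0

rng = np.random.default_rng(42)
face = rng.integers(0, 256, size=(48, 48), dtype=np.uint8)  # stand-in for a FER-2013 image
x = augment(face, rng)
print(x.shape, x.dtype, x.min() >= 0.0, x.max() <= 1.0)
```

Because a new flip decision and angle are drawn every time an image is loaded, each epoch sees slightly different versions of the same 28,709 training faces.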

4. Requirements

requirements.txt

```
numpy>=1.19.0
matplotlib>=3.3.0
plotly>=5.0.0
torch>=1.9.0
torchvision>=0.10.0
timm>=0.4.12
tqdm>=4.62.0
pillow>=8.0.0
```

5. Installation & Configuration

5.1 Environment Setup

```bash
# Clone the repository
git clone https://github.com/kemalkilicaslan/Facial-Expression-Recognition-System.git
cd Facial-Expression-Recognition-System

# Install required packages
pip install -r requirements.txt
```

5.2 Project Structure

```
Facial-Expression-Recognition-System
├── test/                  # Test dataset (7,178 images)
│   ├── angry/
│   ├── disgust/
│   ├── fear/
│   ├── happy/
│   ├── neutral/
│   ├── sad/
│   └── surprise/
├── train/                 # Training dataset (28,709 images)
│   ├── angry/
│   ├── disgust/
│   ├── fear/
│   ├── happy/
│   ├── neutral/
│   ├── sad/
│   └── surprise/
├── Facial-Expression-Recognition-System.ipynb
├── README.md
├── requirements.txt
└── LICENSE
```

5.3 Dataset Information

FER-2013 Dataset:

- Total images: 35,887 (48×48 pixels, grayscale)

- Training set: 28,709 images (80%)

- Test set: 7,178 images (20%)

- Classes: 7 emotions

  - 0: Angry

  - 1: Disgust

  - 2: Fear

  - 3: Happy

  - 4: Neutral

  - 5: Sad

  - 6: Surprise
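The index mapping above follows the alphabetical order of the class folder names, which is how `torchvision.datasets.ImageFolder` assigns labels. Below is a small standard-library sketch for checking the class mapping and per-split image counts. It assumes the script runs from the repository root and that images are stored as `.jpg`, `.jpeg` or `.png` files. `class_to_idx`, `count_images` and `IMAGE_SUFFIXES` are illustrative names, not part of the project.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumed image file types

def class_to_idx(folder_names):
    # Sort folder names alphabetically and number them from 0, the same rule
    # torchvision.datasets.ImageFolder uses to assign class indices.
    return {name: i for i, name in enumerate(sorted(folder_names))}

def count_images(split_dir):
    # Count image files in each class sub-folder of a split (e.g. "train/").
    split_dir = Path(split_dir)
    return {
        d.name: sum(1 for f in d.iterdir() if f.suffix.lower() in IMAGE_SUFFIXES)
        for d in sorted(split_dir.iterdir()) if d.is_dir()
    }

# Run from the repository root; skipped if the dataset folders are absent.
totals = {}
for split in ("train", "test"):
    if Path(split).is_dir():
        counts = count_images(split)
        totals[split] = sum(counts.values())
        print(split, totals[split], class_to_idx(counts))
if len(totals) == 2:
    print(f"test share: {totals['test'] / sum(totals.values()):.1%}")
```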

6. Usage / How to Run

6.1 Training the Model

Open and run the Jupyter notebook:

```bash
jupyter notebook Facial-Expression-Recognition-System.ipynb
```

Or upload the notebook to Google Colab and run the cells sequentially.

6.2 Configuration Parameters

```python
# Hyperparameters (can be modified in the notebook)
lr = 0.0001               # Learning rate
batch_size = 16           # Batch size for training
epochs = 20               # Number of training epochs
device = 'cuda'           # 'cuda' for GPU, 'cpu' for CPU
model_name = 'vgg19_bn'   # Model architecture
dropout_rate = 0.3        # Dropout probability
```

6.3 Model Training Process

```python
import torch

# FaceRecognitionModel, train_func, eval_func, trainloader and testloader
# are defined in the notebook.

# Initialize model
model = FaceRecognitionModel(dropout_rate=0.3)
model.to(device)

# Initialize optimizer
optimizer = torch.optim.AdamW(model.parameters(), lr=lr, weight_decay=0.01)

# Train the model, keeping the weights with the lowest test loss
best_test_loss = float('inf')
for epoch in range(epochs):
    train_loss, train_acc = train_func(model, trainloader, optimizer, epoch)
    test_loss, test_acc = eval_func(model, testloader, epoch)

    # Save best model
    if test_loss < best_test_loss:
        best_test_loss = test_loss
        torch.save(model.state_dict(), 'best-weights.pt')
```

6.4 Making Predictions

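```python
# Load best model (map_location places the saved tensors on the configured device)
model.load_state_dict(torch.load('best-weights.pt', map_location=device))

# Make predictions
predict(model, testloader, num_class=7)
```

7. Application / Results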

7.1 Sample Training Images

Training Set Example:

Test Set Example:

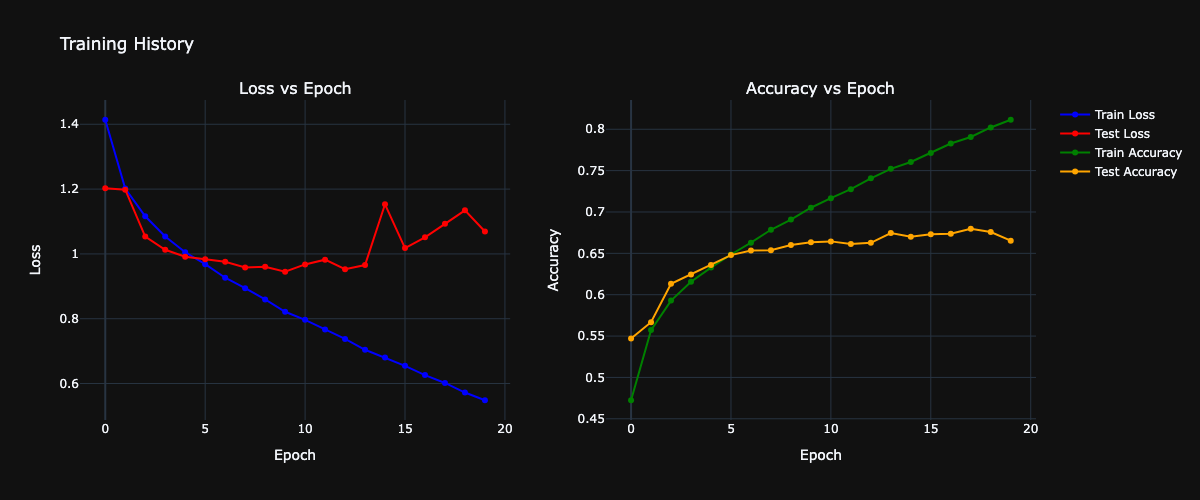

7.2 Training History

Loss and Accuracy Over 20 Epochs:

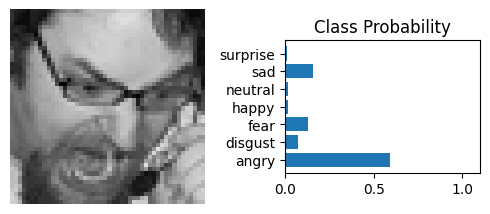

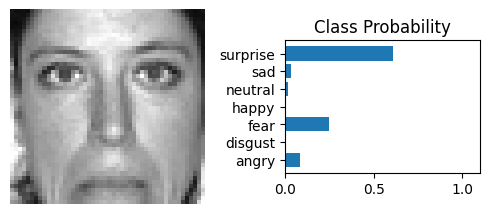

7.3 Prediction Results

Angry Expression:

- True Label: Angry

- Predicted Label: Angry

- Confidence: 59.37%

Disgust Expression:

- True Label: Disgust

- Predicted Label: Surprise

- Confidence: 60.77%

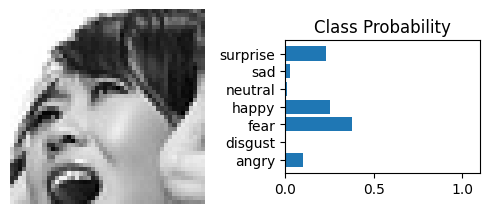

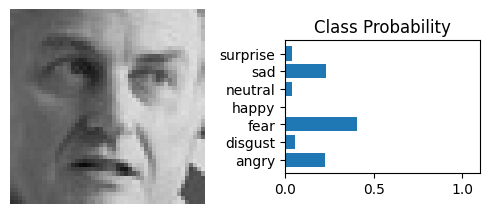

Fear Expression:

- True Label: Fear

- Predicted Label: Fear

- Confidence: 37.67%

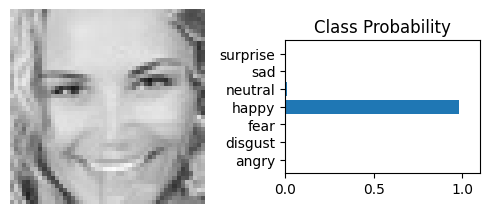

Happy Expression:

- True Label: Happy

- Predicted Label: Happy

- Confidence: 98.41%

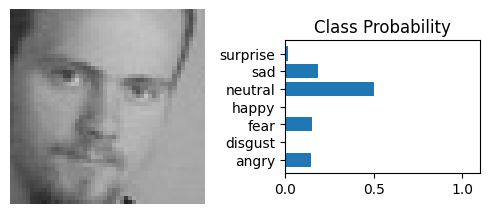

Neutral Expression:

- True Label: Neutral

- Predicted Label: Neutral

- Confidence: 50.31%

Sad Expression:

- True Label: Sad

- Predicted Label: Fear

- Confidence: 40.81%

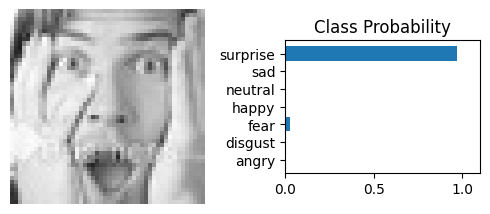

Surprise Expression:

- True Label: Surprise

- Predicted Label: Surprise

- Confidence: 96.73%

7.4 Performance Metrics

Best Model Performance (Epoch 10, lowest test loss):

| Metric | Training Set | Test Set |
|---|---|---|
| Loss | 0.8215 | 0.9448 |
| Accuracy | 70.51% | 66.33% |

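Per-Emotion Performance (confidence of the single test-image predictions in Section 7.3, not per-class accuracy):

| Emotion | Recognition Quality |
|---|---|
| Happy | Excellent (correct, 98.41% confidence) |
| Surprise | Excellent (correct, 96.73% confidence) |
| Angry | Good (correct, 59.37% confidence) |
| Neutral | Moderate (correct, 50.31% confidence) |
| Fear | Moderate (correct, 37.67% confidence) |
| Sad | Challenging (misclassified as Fear, 40.81% confidence) |
| Disgust | Challenging (misclassified as Surprise, 60.77% confidence) |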
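A per-emotion figure that covers the whole test set, rather than one image per class, can be obtained from a confusion matrix. Per-class recall (the diagonal divided by the row sums) is the fraction of each true emotion that was recognised correctly. The NumPy sketch below uses hypothetical labels. With the trained model, `y_true` and `y_pred` would be collected by running it over `testloader` and taking the argmax of each output. Both helpers are illustrative names.

```python
import numpy as np

CLASSES = ["Angry", "Disgust", "Fear", "Happy", "Neutral", "Sad", "Surprise"]

def confusion_matrix(y_true, y_pred, num_classes=7):
    # cm[t, p] counts images whose true class is t and predicted class is p.
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def per_class_recall(cm):
    # Diagonal / row sums: share of each true class predicted correctly.
    # Classes with no samples get NaN instead of a division-by-zero warning.
    support = cm.sum(axis=1)
    return np.divide(np.diag(cm), support, out=np.full(len(cm), np.nan), where=support > 0)

# Hypothetical labels for illustration only (not results from this project).
y_true = [0, 0, 1, 2, 3, 3, 5, 6]
y_pred = [0, 2, 6, 2, 3, 3, 2, 6]
cm = confusion_matrix(y_true, y_pred)
for name, r in zip(CLASSES, per_class_recall(cm)):
    print(f"{name:9s} {r:.2f}")
```

The off-diagonal cells also show which emotions are confused with each other (for example, true Disgust predicted as Surprise). Single examples like those in Section 7.3 can only hint at these patterns.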

8. Tech Stack

8.1 Core Technologies

- Programming Language: Python 3.7+

- Deep Learning Framework: PyTorch 1.9+

- Model Library: timm (PyTorch Image Models)

- Dataset: FER-2013 (Facial Expression Recognition 2013)

8.2 Libraries & Dependencies

| Library | Version | Purpose |
|---|---|---|
| torch | 1.9+ | Deep learning framework |
| torchvision | 0.10+ | Image transformations and datasets |
| timm | 0.4.12+ | Pre-trained model access (VGG19) |
| numpy | 1.19+ | Numerical computations |
| matplotlib | 3.3+ | Static visualization |
| plotly | 5.0+ | Interactive visualization |
| tqdm | 4.62+ | Progress bar for training |
| pillow | 8.0+ | Image processing |

8.3 Training Configuration

| Parameter | Value | Purpose |
|---|---|---|
| Optimizer | AdamW | Adam with decoupled weight decay |
| Learning Rate | 0.0001 | Step size for parameter updates |
| Weight Decay | 0.01 | Regularization (decoupled weight decay, not an L2 loss term) |
| Batch Size | 16 | Mini-batch size |
| Epochs | 20 | Full passes over the training set |
| Dropout Rate | 0.3 | Reduce overfitting |
| Gradient Clipping | 1.0 | Prevent exploding gradients |
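AdamW differs from Adam with an L2 penalty because it applies weight decay directly to the weights instead of adding it to the gradient, so the decay is not rescaled by Adam's adaptive step size. Below is a NumPy sketch of one update. It follows the order of operations in PyTorch's `torch.optim.AdamW`, with its default betas (0.9, 0.999) and eps (1e-8), plus the learning rate and weight decay from the table above. `adamw_step` is a hypothetical helper, not the project's code.

```python
import numpy as np

def adamw_step(param, grad, m, v, t, lr=1e-4, weight_decay=0.01,
               betas=(0.9, 0.999), eps=1e-8):
    """One AdamW update; returns the new (param, m, v). t is the 1-based step count."""
    beta1, beta2 = betas
    # 1) Decoupled weight decay: shrink the weights directly, independent of the gradient.
    param = param * (1.0 - lr * weight_decay)
    # 2) Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad ** 2
    # 3) Bias correction for the zero-initialised averages.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    # 4) Adaptive step.
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v

w = np.array([1.0, -2.0])
g = np.array([0.5, 0.0])
w_new, m, v = adamw_step(w, g, np.zeros(2), np.zeros(2), t=1)
print(w_new)
```

The second weight has a zero gradient, so its only change is the decay factor 1 - lr * weight_decay = 0.999999. That shrinkage is applied the same way no matter how large or small the parameter's gradient history is.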

9. License

This project is open source and available under the Apache License 2.0.

10. References

- Kaggle FER-2013 Dataset.

- PyTorch Transfer Learning Tutorial Documentation.

- Hugging Face PyTorch Image Models (timm) GitHub Repository.

Acknowledgments

This project uses the FER-2013 dataset created for the Facial Expression Recognition Challenge. Special thanks to the PyTorch and timm communities for providing excellent deep learning tools and pre-trained models. The VGG19 architecture was originally developed by the Visual Geometry Group at the University of Oxford.

Note: This system is designed for research and educational purposes. Facial expression recognition should be used responsibly and ethically, with consideration for privacy, consent, and potential biases in emotion detection. The model's performance varies across different emotions, with some expressions (like "disgust" and "sad") being more challenging to classify accurately.