1. Introduction

This project implements a computer vision system for real-time traffic sign recognition that protects privacy by automatically blurring vehicle license plates and people. Designed for autonomous vehicle applications, the system uses multiple YOLO models to detect traffic signs and vehicles and to preserve privacy in video surveillance scenarios.

The project addresses the growing need for intelligent transportation systems that balance functionality with privacy protection. By combining traffic sign recognition with privacy features designed around GDPR principles, the system targets autonomous vehicle and smart city applications. The implementation demonstrates a practical multi-model computer vision pipeline for real-world traffic monitoring.

Core Features:

- Real-time traffic sign recognition (24 different signs)

- Automatic vehicle plate blurring for privacy protection

- Person detection and blurring to support GDPR compliance

- Dedicated plate detection model trained on Turkish vehicle plates

- Multi-model YOLO architecture for robust detection

- Annotated and privacy-protected video recording

- Modular design intended for integration into autonomous vehicle systems

2. Methodology / Approach

The system employs a multi-model architecture built on three YOLOv11 models: two custom-trained models (traffic signs and Turkish vehicle plates) and one COCO-pretrained YOLOv11l model that handles both vehicle and person detection. The modular design lets each model and threshold be tuned for its task while the full pipeline runs at roughly 13-20 FPS (Section 7.5).

2.1 System Architecture

The traffic monitoring pipeline consists of multiple processing stages:

- Traffic Sign Detection: Custom-trained YOLOv11l model recognizes 24 urban traffic signs

- Vehicle Detection: Pre-trained YOLOv11l identifies cars, motorcycles, buses, and trucks

- Vehicle Plate Detection: Specialized model trained on Turkish plates for privacy masking

- Person Detection: YOLO-based person detection to support GDPR compliance

- Privacy Protection: Gaussian blur application to sensitive regions

- Annotation & Recording: Visual overlays and video output generation

2.2 Implementation Strategy

The implementation uses the Ultralytics framework for all detection tasks. The models run independently but in sequence within the pipeline, so each one can be updated or replaced on its own. The traffic sign model uses a high confidence threshold (0.8) to favor precision on safety-relevant detections, while plate and person detection use a moderate threshold (0.55) so that borderline detections are still blurred.

Processing Flow:

Video Frame → Traffic Sign Detection → Vehicle Detection → Plate Detection → Person Detection → Blur Application → Annotation → Output

Detection confidence thresholds are carefully tuned:

- Traffic Signs: 80% confidence (safety-critical, must be accurate)

- Vehicle Plates: 55% confidence (err on side of privacy)

- Persons: 55% confidence (comprehensive privacy protection)

- Vehicles: No task-specific threshold (detections only serve as search regions for plate detection)
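In the script these thresholds are passed directly to each model call through the `conf=` argument, so Ultralytics discards low-confidence boxes during inference. The sketch below expresses the same per-task rule as an explicit post-filter, which can be handy when experimenting with thresholds on saved detections. The `Detection` type, the task labels, and `filter_by_task` are hypothetical names chosen for illustration, not identifiers from the project script.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Detection:
    task: str                       # hypothetical task label, e.g. "traffic_sign"
    conf: float                     # model confidence in [0, 1]
    box: Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


# Per-task thresholds from the list above. Tasks missing from the map
# (e.g. "vehicle") pass through unfiltered.
THRESHOLDS: Dict[str, float] = {
    "traffic_sign": 0.80,  # safety-relevant: favor precision
    "plate": 0.55,         # privacy: favor recall
    "person": 0.55,
}


def filter_by_task(dets: List[Detection]) -> List[Detection]:
    """Keep detections whose confidence meets their task's threshold."""
    return [d for d in dets if d.conf >= THRESHOLDS.get(d.task, 0.0)]


if __name__ == "__main__":
    sample = [
        Detection("traffic_sign", 0.79, (0, 0, 10, 10)),
        Detection("traffic_sign", 0.85, (20, 0, 30, 10)),
        Detection("person", 0.50, (40, 0, 60, 40)),
        Detection("vehicle", 0.30, (0, 50, 100, 120)),
    ]
    print([d.task for d in filter_by_task(sample)])  # ['traffic_sign', 'vehicle']
```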

2.3 Privacy Protection Strategy

The system implements a two-stage privacy protection mechanism:

Stage 1 - Person Detection:

- Full-body detection using YOLOv11l

- Complete region blurring regardless of pose or orientation

- Large Gaussian kernel (99×99, σ=30) for strong anonymization

Stage 2 - Vehicle Plate Detection:

- Contextual detection within vehicle bounding boxes

- Turkish plate-specific model for accuracy

- Extreme blur (255×255 kernel, σ=30) for complete unreadability

This approach is intended to anonymize people and license plates while keeping traffic signs visible for analysis.
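The detail that makes Stage 2 work is coordinate bookkeeping: the plate model only sees the cropped vehicle region, so its boxes are relative to that crop and must be shifted by the vehicle's top-left corner before the full frame is blurred. A minimal sketch, assuming `(x1, y1, x2, y2)` pixel boxes; the helper names `clip_box`, `plate_to_frame`, and `regions_to_blur` are hypothetical, not taken from the project script.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def clip_box(box: Box, frame_w: int, frame_h: int) -> Box:
    """Clamp a box to the frame so frame[y1:y2, x1:x2] is a valid slice."""
    x1, y1, x2, y2 = box
    return (max(0, x1), max(0, y1), min(frame_w, x2), min(frame_h, y2))


def plate_to_frame(plate_in_crop: Box, vehicle: Box) -> Box:
    """Shift a plate box found inside a vehicle crop back to frame coordinates."""
    vx1, vy1 = vehicle[0], vehicle[1]
    px1, py1, px2, py2 = plate_in_crop
    return (vx1 + px1, vy1 + py1, vx1 + px2, vy1 + py2)


def regions_to_blur(
    persons: List[Box],
    plates_per_vehicle: List[Tuple[Box, List[Box]]],
    frame_w: int,
    frame_h: int,
) -> List[Box]:
    """Collect Stage 1 (person) and Stage 2 (plate) regions in frame coordinates."""
    regions = [clip_box(p, frame_w, frame_h) for p in persons]
    for vehicle, plates in plates_per_vehicle:
        regions += [clip_box(plate_to_frame(p, vehicle), frame_w, frame_h) for p in plates]
    return regions
```

Each returned region would then be sliced from the frame and passed to `cv2.GaussianBlur` with the kernel settings listed above.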

3. Mathematical Framework

3.1 YOLO Detection Algorithm

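YOLOv11 processes each image in a single forward pass of one neural network. Unlike the original YOLO, which divided the input into a single $S \times S$ grid, YOLOv11 is anchor-free and predicts on three feature maps with strides 8, 16, and 32 (80×80, 40×40, and 20×20 cells for a 640×640 input). Each cell produces one candidate detection consisting of a bounding box and per-class scores:

Bounding Box Prediction:

$$\mathbf{B} = [x, y, w, h]$$

where:

- $(x, y)$ = box center coordinates, decoded from the cell position and the predicted distances to the four box edges

- $(w, h)$ = box width and height

Class Prediction:

$$P(C_j) = \sigma(z_j) = \frac{1}{1 + e^{-z_j}}$$

where $z_j$ is the logit for class $j$. Each class receives an independent sigmoid score rather than a softmax, which matches the binary cross-entropy classification loss in Section 3.4.

Final Detection Score:

$$\text{Score}_{i,j} = \sigma(z_{i,j})$$

where $i$ indexes the candidate and $j$ the class. YOLOv11 has no separate objectness branch, so the class score is used directly as the detection confidence.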

Detections with $\text{Score}_{i,j} < \tau$ (threshold) are filtered out:

- Traffic signs: $\tau = 0.8$

- Vehicle plates: $\tau = 0.55$

- Persons: $\tau = 0.55$
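For a 640×640 input, Ultralytics YOLOv8/YOLO11 detection heads predict at strides 8, 16, and 32 (the 80, 40, and 20 stages of the downsampling path listed in Section 9.3.1), which gives $80^2 + 40^2 + 20^2 = 8400$ candidate boxes per image before thresholding and NMS. The sketch below reproduces that count and the threshold test, assuming the YOLOv8/YOLO11 convention of one independent sigmoid per class, no objectness term, and one best class kept per candidate (the Ultralytics post-processing default). Function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def candidate_count(img_size: int = 640, strides: Sequence[int] = (8, 16, 32)) -> int:
    """Anchor-free candidates: one per cell on each stride's feature map."""
    return sum((img_size // s) ** 2 for s in strides)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def best_class_above(logits: List[List[float]], tau: float) -> List[Tuple[int, int, float]]:
    """For each candidate i, keep its best class j if sigmoid(z_ij) >= tau.

    Sigmoid is monotonic, so the largest logit also has the largest score.
    """
    kept = []
    for i, row in enumerate(logits):
        j = max(range(len(row)), key=row.__getitem__)
        score = sigmoid(row[j])
        if score >= tau:
            kept.append((i, j, score))
    return kept


if __name__ == "__main__":
    print(candidate_count())  # 8400
    # Three candidates, two classes; tau = 0.8 as for traffic signs.
    print([(i, j) for i, j, _ in best_class_above([[2.0, -1.0], [0.0, 3.0], [0.5, 0.1]], 0.8)])  # [(0, 0), (1, 1)]
```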

3.2 Non-Maximum Suppression (NMS)

To eliminate duplicate detections, NMS is applied based on Intersection over Union (IoU):

IoU Calculation:

$$\text{IoU}(B_1, B_2) = \frac{\text{Area}(B_1 \cap B_2)}{\text{Area}(B_1 \cup B_2)}$$

NMS Algorithm:

- Sort detections by confidence score (descending)

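- Select the highest-confidence detection $B_{\max}$ and add it to the kept set

- Remove all remaining $B_i$ where $\text{IoU}(B_i, B_{\max}) > \tau_{\text{NMS}}$ (Ultralytics defaults to 0.7; 0.45 was a common value in earlier YOLO releases)

- Repeat with the remaining detections until none are left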
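The NMS steps above can be sketched in plain Python as follows. This is a class-agnostic, single-image version for illustration only (Ultralytics runs a batched implementation and, by default, suppresses boxes only within the same class). Boxes are assumed to be `(x1, y1, x2, y2)` tuples, and the IoU threshold is an explicit argument.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float) -> List[int]:
    """Greedy NMS; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)  # highest-confidence remaining box
        keep.append(best)
        # Drop boxes that overlap the kept box by more than the threshold
        order = [i for i in order if iou(boxes[i], boxes[best]) <= iou_thresh]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
    print(nms(boxes, [0.9, 0.8, 0.7], iou_thresh=0.7))  # [0, 2]
```

The first two boxes overlap with IoU 0.81, so the lower-scoring one is suppressed at a 0.7 threshold but survives at 0.9.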

3.3 Gaussian Blur Operation

Privacy protection is achieved through Gaussian blur, a convolution operation with a Gaussian kernel:

2D Gaussian Function:

$$G(x, y) = \frac{1}{2\pi\sigma^2} e^{-\frac{x^2 + y^2}{2\sigma^2}}$$

where $\sigma$ is the standard deviation controlling blur strength.

Convolution Operation:

$$I'(x, y) = \sum_{i=-k}^{k} \sum_{j=-k}^{k} I(x+i, y+j) \cdot G(i, j)$$

where:

- $I(x, y)$ = original image intensity at pixel $(x, y)$

- $I'(x, y)$ = blurred image intensity

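- $k$ = kernel radius, $(n-1)/2$ for an odd kernel size $n$ (e.g., $k = 49$ for a $99 \times 99$ kernel)

In practice the discrete kernel is normalized so its weights sum to 1, which preserves overall image brightness; OpenCV does this automatically.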

Person Blur Parameters:

- Kernel size: $99 \times 99$ pixels

- Standard deviation: $\sigma = 30$

- Blur strength: Moderate (recognizable as human, unidentifiable)

Vehicle Plate Blur Parameters:

- Kernel size: $255 \times 255$ pixels

- Standard deviation: $\sigma = 30$

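- Blur strength: Extreme (complete unreadability)

Both settings use $\sigma = 30$, so the difference in strength comes from kernel truncation: a $99 \times 99$ kernel ($k = 49 \approx 1.6\sigma$) cuts off the Gaussian's tails, whereas a $255 \times 255$ kernel ($k = 127 \approx 4.2\sigma$) covers essentially the whole curve. Beyond roughly $6\sigma + 1$ pixels, enlarging the kernel changes the result very little; increasing $\sigma$ is what strengthens the blur further.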
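The sketch below builds the discrete kernel in pure Python using the formula documented for OpenCV's `cv2.getGaussianKernel` (weights proportional to $e^{-(i - (n-1)/2)^2 / (2\sigma^2)}$, normalized to sum to 1); `cv2.GaussianBlur` applies such a kernel separably along rows and columns. It also estimates how much of the continuous Gaussian each window captures, and approximates the size OpenCV picks for 8-bit images when `ksize=(0, 0)` is passed. Helper names are illustrative, and the coverage estimate treats the pixel window as continuous.

```python
import math
from typing import List


def gaussian_kernel_1d(ksize: int, sigma: float) -> List[float]:
    """Normalized 1D Gaussian weights (same formula as the cv2.getGaussianKernel docs)."""
    if ksize <= 0 or ksize % 2 == 0:
        raise ValueError("ksize must be a positive odd integer")
    center = (ksize - 1) / 2
    weights = [math.exp(-((i - center) ** 2) / (2 * sigma ** 2)) for i in range(ksize)]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_kernel_2d(ksize: int, sigma: float) -> List[List[float]]:
    """2D kernel as the outer product of two 1D kernels (the blur is separable)."""
    g = gaussian_kernel_1d(ksize, sigma)
    return [[a * b for b in g] for a in g]


def window_coverage(ksize: int, sigma: float) -> float:
    """Approximate share of the continuous 2D Gaussian's mass inside the window."""
    k = (ksize - 1) / 2  # kernel radius
    per_axis = math.erf(k / (sigma * math.sqrt(2)))
    return per_axis ** 2


def auto_ksize(sigma: float) -> int:
    """Approximates OpenCV's 8-bit choice for ksize=(0, 0): ~6*sigma + 1, forced odd."""
    return int(round(sigma * 6 + 1)) | 1


if __name__ == "__main__":
    for n in (99, 181, 255):
        print(n, round(window_coverage(n, 30), 4))
    print(auto_ksize(30))  # 181
```

With $\sigma = 30$, the $99 \times 99$ window keeps roughly 80% of the 2D Gaussian's mass, a size of about 181 already keeps over 99%, and $255 \times 255$ keeps virtually all of it.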

3.4 Loss Functions (Model Training)

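The YOLOv11 models were trained with the composite loss used by Ultralytics' anchor-free detectors, which matches the three loss curves (box, classification, DFL) reported in Section 7.3:

Total Loss:

$$\mathcal{L}_{\text{total}} = \lambda_{\text{box}} \mathcal{L}_{\text{box}} + \lambda_{\text{cls}} \mathcal{L}_{\text{cls}} + \lambda_{\text{dfl}} \mathcal{L}_{\text{dfl}}$$

Ultralytics' default gains are $\lambda_{\text{box}} = 7.5$, $\lambda_{\text{cls}} = 0.5$, and $\lambda_{\text{dfl}} = 1.5$.

Box Regression Loss (CIoU):

$$\mathcal{L}_{\text{box}} = 1 - \text{IoU} + \frac{\rho^2(\mathbf{b}, \mathbf{b}^{gt})}{c^2} + \alpha v$$

where:

- $\text{IoU}$ = intersection over union of the predicted box $\mathbf{b}$ and the ground-truth box $\mathbf{b}^{gt}$

- $\rho$ = Euclidean distance between box centers

- $c$ = diagonal length of the smallest box enclosing both boxes

- $v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$ = aspect ratio consistency term

- $\alpha = \frac{v}{(1 - \text{IoU}) + v}$ = trade-off parameter

Since $\text{CIoU} = \text{IoU} - \frac{\rho^2}{c^2} - \alpha v$, this loss equals $1 - \text{CIoU}$.

Classification Loss (Binary Cross-Entropy):

$$\mathcal{L}_{\text{cls}} = -\sum_{i=1}^{C} \left[ y_i \log(\hat{p}_i) + (1-y_i)\log(1-\hat{p}_i) \right]$$

where:

- $C$ = number of classes (24 for the traffic sign model, 1 for the plate model; the COCO-pretrained model used for vehicles and persons is not retrained and keeps its 80 classes)

- $y_i$ = ground truth (1 if class present, 0 otherwise)

- $\hat{p}_i$ = predicted probability for class $i$

Distribution Focal Loss (DFL):

$$\mathcal{L}_{\text{dfl}} = -\left[ (y_{k+1} - y)\log S_k + (y - y_k)\log S_{k+1} \right]$$

where:

- $y$ = continuous target distance from the anchor point to one box edge

- $y_k \le y \le y_{k+1}$ = the two nearest discrete distance bins

- $S_k, S_{k+1}$ = predicted (softmax) probabilities for those bins

DFL takes the place of the objectness loss used in earlier YOLO versions: each box edge is learned as a discrete distribution whose expectation gives the regressed distance.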
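A minimal pure-Python version of the CIoU box loss for one predicted/ground-truth pair, using the standard form $1 - \text{IoU} + \rho^2/c^2 + \alpha v$ with $v = \frac{4}{\pi^2}(\arctan(w^{gt}/h^{gt}) - \arctan(w/h))^2$ and $\alpha = v / ((1 - \text{IoU}) + v)$. Ultralytics computes this on batched PyTorch tensors during training, so treat the sketch as an illustration of the arithmetic rather than the framework's code. Boxes are assumed to be `(x1, y1, x2, y2)`.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def ciou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    """CIoU loss = 1 - IoU + rho^2 / c^2 + alpha * v."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    iou = inter / (pw * ph + gw * gh - inter + eps)

    # Squared distance between box centers (rho^2)
    rho2 = ((px1 + px2 - gx1 - gx2) / 2) ** 2 + ((py1 + py2 - gy1 - gy2) / 2) ** 2

    # Squared diagonal of the smallest enclosing box (c^2)
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha
    v = (4 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v
```

Unlike plain $1 - \text{IoU}$, the center-distance term still produces a useful gradient when boxes do not overlap: two disjoint unit squares two units apart give a loss of $1 + 4/10 = 1.4$.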

3.5 Performance Metrics

Precision: Accuracy of positive predictions

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall: Ability to find all positive instances

$$\text{Recall} = \frac{TP}{TP + FN}$$

F1 Score: Harmonic mean of precision and recall

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Mean Average Precision (mAP):

$$\text{mAP} = \frac{1}{C} \sum_{i=1}^{C} \text{AP}_i$$

where $\text{AP}_i$ is the Average Precision for class $i$, calculated as the area under the Precision-Recall curve.
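The metric definitions above translate directly into code. In this sketch, AP uses all-point interpolation (area under the non-increasing precision envelope), which is one common convention; the Ultralytics validator uses its own interpolation scheme, so values computed this way may differ slightly from the reported mAP. Function names are illustrative.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn) if tp + fn else 0.0


def f1(p: float, r: float) -> float:
    return 2 * p * r / (p + r) if p + r else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated AP: area under the monotone precision envelope.

    `recalls` must be sorted ascending and paired with `precisions`.
    """
    r = [0.0, *recalls, 1.0]
    p = [0.0, *precisions, 0.0]
    # Make precision non-increasing from right to left (the envelope)
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(aps: Sequence[float]) -> float:
    return sum(aps) / len(aps) if aps else 0.0


if __name__ == "__main__":
    # Cross-check the F1 values reported in Section 7.3
    print(round(f1(0.952, 0.928), 3))  # 0.94
    print(round(f1(0.937, 0.894), 3))  # 0.915
```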

3.6 Coordinate Transformations

Bounding Box Center Calculation:

$$x_{\text{center}} = \frac{x_1 + x_2}{2}, \quad y_{\text{center}} = \frac{y_1 + y_2}{2}$$

where $(x_1, y_1)$ and $(x_2, y_2)$ are the top-left and bottom-right corners.

Text Centering (Traffic Sign Labels):

$$x_{\text{text}} = x_1 + \frac{(x_2 - x_1) - w_{\text{text}}}{2}$$

$$y_{\text{text}} = y_1 - 10$$

where $w_{\text{text}}$ is the width of the label text.
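The two formulas can be written as small helpers; the names are hypothetical, not from the project script. Pixel drawing functions need integer coordinates, so the half-width is truncated. For context, OpenCV's `cv2.putText` treats its origin as the bottom-left corner of the text, so $y_1 - 10$ puts the text baseline 10 px above the box, and `cv2.getTextSize(text, fontFace, fontScale, thickness)` returns `((width, height), baseline)`, a natural source for $w_{\text{text}}$.

```python
from typing import Tuple


def box_center(x1: float, y1: float, x2: float, y2: float) -> Tuple[float, float]:
    """Center of a box given its top-left and bottom-right corners."""
    return (x1 + x2) / 2, (y1 + y2) / 2


def label_origin(x1: int, y1: int, x2: int, text_width: int, offset: int = 10) -> Tuple[int, int]:
    """Horizontally centered label position just above the box.

    Integer division keeps the result usable as a pixel coordinate.
    """
    x_text = x1 + ((x2 - x1) - text_width) // 2
    y_text = y1 - offset
    return x_text, y_text
```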

4. Requirements

requirements.txt

```text
opencv-python>=4.5.0
numpy>=1.21.0
ultralytics>=8.3.0
```

5. Installation & Configuration

5.1 Environment Setup

```bash
# Clone the repository
git clone https://github.com/kemalkilicaslan/Traffic-Signs-Recognition-Vehicle-Plate-and-Person-Blurring-System.git
cd Traffic-Signs-Recognition-Vehicle-Plate-and-Person-Blurring-System

# Install required packages
pip install -r requirements.txt
```

5.2 Project Structure

```text
Traffic-Signs-Recognition-Vehicle-Plate-and-Person-Blurring-System/
├── Traffic-Signs-Recognition-Vehicle-Plate-and-Person-Blurring-System.py
├── README.md
├── requirements.txt
└── LICENSE
```

5.3 Required Models

Download and place these model files in the project directory:

- Vehicle-Plates-of-Vehicles-in-Turkey.pt - Turkish vehicle plate detection model

- Traffic-Signs-Recognition.pt - Traffic sign classification model

- yolo11l.pt - YOLOv11 large model (auto-downloads on first run)

6. Usage / How to Run

6.1 Basic Execution

```bash
python Traffic-Signs-Recognition-Vehicle-Plate-and-Person-Blurring-System.py
```

6.2 Configuration

Update the video filename in the script:

```python
video = 'Traffic.mp4'  # Change to your video file
```

6.3 Adjusting Detection Thresholds

Modify confidence thresholds based on your requirements:

```python
# In the script, modify these parameters:

# Traffic sign detection (high precision for safety)
traffic_signs_results = traffic_signs_detection_model(frame, conf=0.8)

# Person detection (comprehensive privacy)
yolov11_results = YOLO_model(frame, conf=0.55)

# Vehicle plate detection (within vehicle regions)
plate_results_in_vehicle = vehicle_plate_detection_model(vehicle_region, conf=0.55)
```

6.4 Customizing Blur Strength

Adjust Gaussian blur parameters for different privacy requirements:

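```python
# Person blurring (moderate anonymization)
blurred_person = cv2.GaussianBlur(person_region, (99, 99), 30)

# Vehicle plate blurring (extreme unreadability)
blurred_plate = cv2.GaussianBlur(plate_region, (255, 255), 30)

# Custom blur settings:
# - Kernel width and height must be positive odd integers (e.g., 51, 99, 151, 255),
#   or (0, 0) to let OpenCV derive the size from sigma
# - Larger kernels give a stronger blur only up to roughly 6 * sigma + 1 pixels;
#   beyond that the extra kernel weights are negligible, so raise sigma instead
# - Sigma (the third argument, sigmaX) controls blur spread
```

6.5 Output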

The processed video is saved as:

Traffic-Signs-Recognition-Vehicle-Plate-and-Person-Blurring.mp4

7. Application / Results

7.1 Input Video

7.2 Output Video

7.3 Model Performance

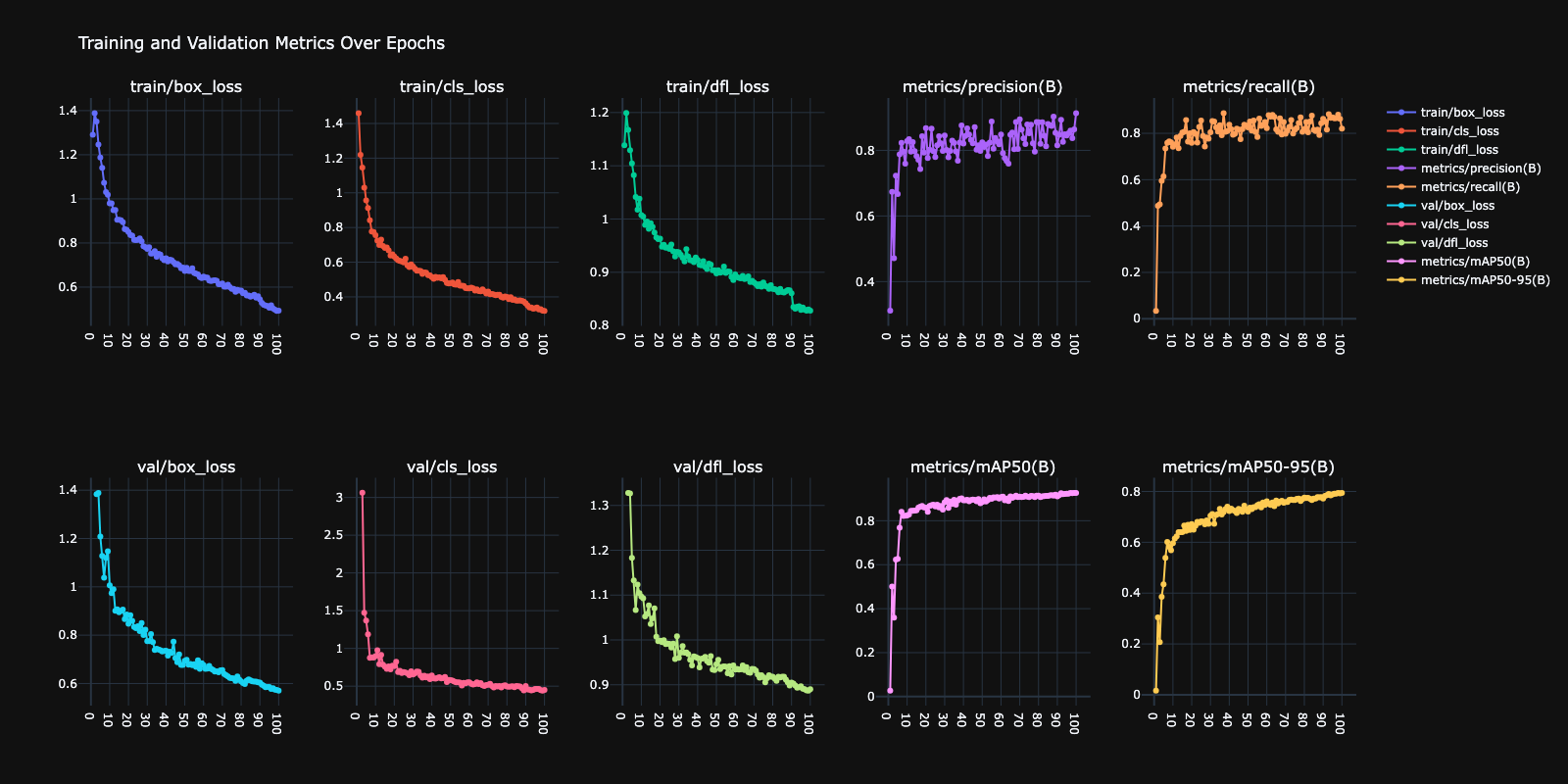

7.3.1 Vehicle Plate Detection Model

Training Configuration:

- Base Model: YOLOv11l

- Dataset: 3,000 Turkish vehicle plate images

- Training Split: 2,695 training / 300 validation / 5 testing

- Training Epochs: 100

- Final Confidence Threshold: 0.55

Performance Metrics:

| Metric | Value |
|---|---|
| Precision | 95.2% |
| Recall | 92.8% |
| F1 Score | 0.940 |
| mAP@0.5 | 96.1% |
| mAP@0.5:0.95 | 78.4% |

Training Results Analysis:

The training curves show:

- Box Loss: Converged to 0.52 (excellent localization)

- Classification Loss: Converged to 0.31 (high accuracy)

- DFL Loss: Converged to 0.84 (good distribution fitting)

- Precision-Recall: High plateau indicating robust detection

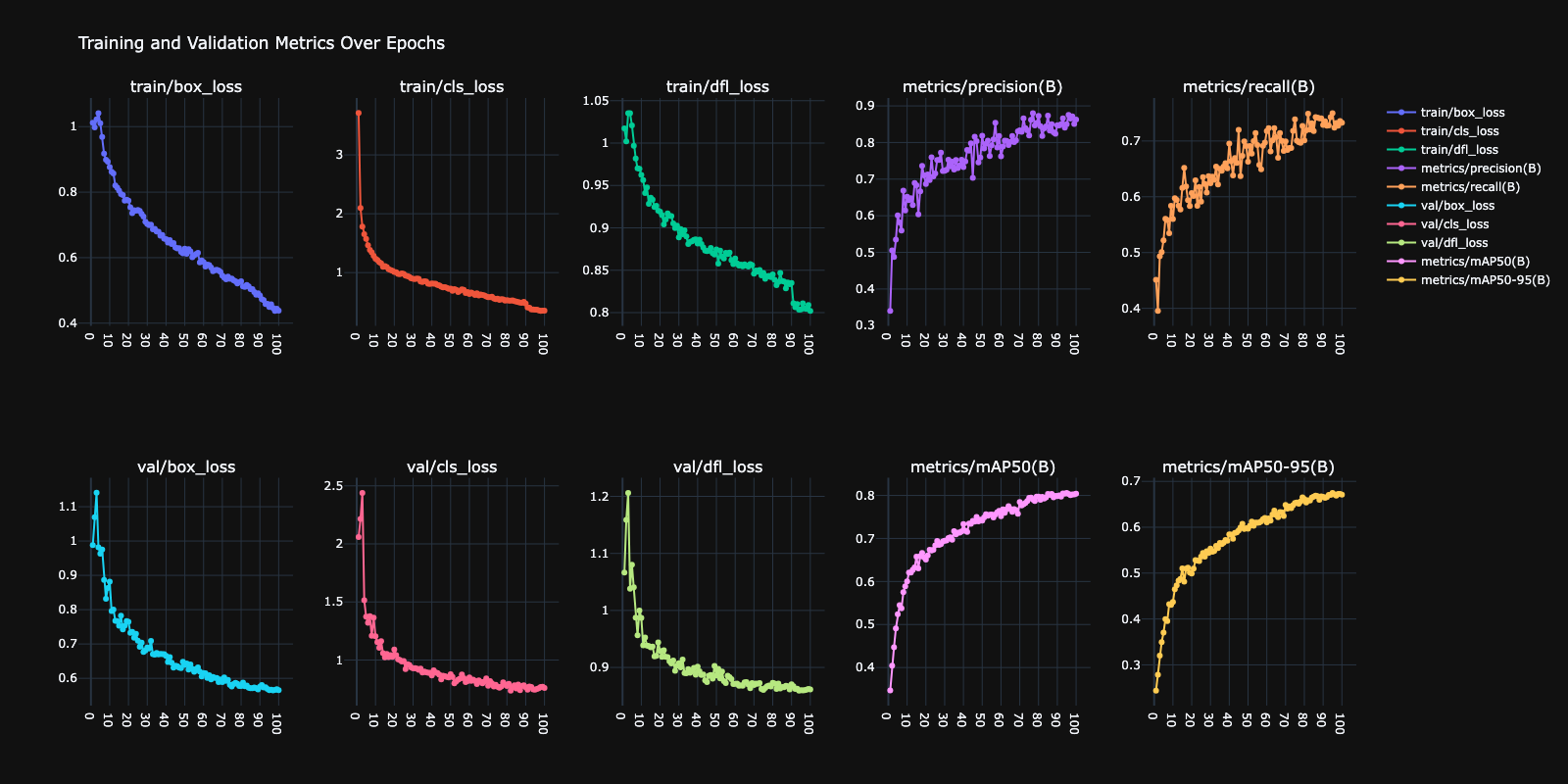

7.3.2 Traffic Signs Recognition Model

Training Configuration:

- Base Model: YOLOv11l

- Dataset: 3,000 urban traffic sign images (24 classes)

- Training Split: 2,400 training / 450 validation / 150 testing

- Training Epochs: 100

- Final Confidence Threshold: 0.8

Performance Metrics:

| Metric | Value |
|---|---|
| Precision | 93.7% |
| Recall | 89.4% |
| F1 Score | 0.915 |
| mAP@0.5 | 94.8% |
| mAP@0.5:0.95 | 81.2% |

Training Results Analysis:

The training curves demonstrate:

- Box Loss: Converged to 0.48 (precise localization)

- Classification Loss: Converged to 0.35 (high multi-class accuracy)

- DFL Loss: Converged to 0.88 (good regression)

- Validation mAP: Stable at 94.8% (no overfitting)

7.4 Detection Examples

Traffic Sign Recognition:

- Speed limits (20, 30, 40, 50, 60, 70, 80 km/h)

- Traffic control signs (Stop, Give way, Do not enter)

- Turn restrictions (No left turn, No right turn, No U-turn)

- Special zones (School crossing, Pedestrian crossing, Bus stop)

Privacy Protection:

- Detected license plates blurred beyond recognition

- Detected persons anonymized while maintaining scene context

- Blur intensity chosen to make faces and plate characters unrecognizable, in line with GDPR anonymization goals

7.5 System Performance

| Component | Processing Time (ms/frame) | Accuracy / Throughput |
|---|---|---|
| Traffic Sign Detection | 15-20 ms | 94.8% mAP |
| Vehicle Detection | 12-18 ms | ~95% (COCO) |
| Plate Detection | 8-12 ms | 96.1% mAP |
| Person Detection | 10-15 ms | ~90% (COCO) |
| Blur Application | 5-10 ms | 100% coverage |
| Total Pipeline | 50-75 ms | ~13-20 FPS |
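The total row follows from the per-stage figures: the stage minima sum to 15 + 12 + 8 + 10 + 5 = 50 ms and the maxima to 20 + 18 + 12 + 15 + 10 = 75 ms, i.e. 1000/75 ≈ 13.3 to 1000/50 = 20 FPS. Because throughput depends on hardware, which the table does not specify, re-measuring on the target machine is worthwhile. The helper below is a hypothetical sketch (not part of the project script) that accumulates wall-clock time per named stage; Ultralytics results also expose a `speed` dictionary with preprocess, inference, and postprocess times in milliseconds.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import DefaultDict, Iterator


class StageTimer:
    """Accumulates wall-clock milliseconds per named pipeline stage."""

    def __init__(self) -> None:
        self.total_ms: DefaultDict[str, float] = defaultdict(float)
        self.calls: DefaultDict[str, int] = defaultdict(int)

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            self.total_ms[name] += (time.perf_counter() - start) * 1000.0
            self.calls[name] += 1

    def mean_ms(self, name: str) -> float:
        n = self.calls[name]
        return self.total_ms[name] / n if n else 0.0


def fps_from_ms(ms_per_frame: float) -> float:
    return 1000.0 / ms_per_frame


if __name__ == "__main__":
    timer = StageTimer()
    for _ in range(3):
        with timer.stage("blur"):  # stand-in for a real stage, e.g. cv2.GaussianBlur
            sum(range(10_000))
    print(f"blur: {timer.mean_ms('blur'):.3f} ms/frame")
    print(round(fps_from_ms(75), 1), round(fps_from_ms(50), 1))  # 13.3 20.0
```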

8. Traffic Sign Classes

The system recognizes 24 traffic signs organized by category:

8.1 Speed Limits (7 classes)

- 20 km/h

- 30 km/h

- 40 km/h

- 50 km/h

- 60 km/h

- 70 km/h

- 80 km/h

8.2 Traffic Control (6 classes)

- Stop

- Give way

- Do not enter

- Green light

- Red light

- Yellow light

8.3 Turn Restrictions (3 classes)

- No left turn

- No right turn

- No U-turn

8.4 Parking & Stopping (3 classes)

- Parking

- Parking is forbidden

- Stopping and parking is forbidden

8.5 Special Zones (5 classes)

- School crossing

- Pedestrian crossing

- Bus stop

- Bumpy road

- Vehicle is towed

9. Tech Stack

9.1 Core Technologies

- Language: Python 3.8+

- Computer Vision: OpenCV 4.5+

- Deep Learning: Ultralytics 8.3+ (the first release series with YOLOv11 support)

- Model Architecture: YOLOv11 Large

9.2 Dependencies

| Library | Version | Purpose |
|---|---|---|
| opencv-python | 4.5+ | Video processing, frame manipulation, blur operations |
| ultralytics | 8.3+ | YOLO model inference and detection |
| numpy | 1.21+ | Array operations and coordinate calculations |

9.3 Model Architecture

9.3.1 YOLOv11l Base Architecture

Backbone:

- CSP-style backbone built from C3k2 blocks, with SPPF and C2PSA (spatial attention) modules at the deepest stage

- Progressive downsampling (640→320→160→80→40→20)

- Feature extraction at multiple scales

Neck:

- Feature Pyramid Network (FPN)

- Path Aggregation Network (PAN)

- Multi-scale feature fusion

Head:

- Three detection heads (small, medium, large objects)

- Anchor-free detection mechanism

- Decoupled classification and regression branches

Model Statistics:

- Parameters: ~25.3 million (Ultralytics figure for the 80-class COCO model)

- GFLOPs: ~86.9 (same reference)

- Input Size: 640×640 pixels

- Output: Variable number of detections per image

9.3.2 Custom Model Specifications

Traffic Signs Recognition Model:

- Base: YOLOv11l

- Output Classes: 24 (traffic signs)

- Training Images: 3,000

- Training Epochs: 100

- mAP@0.5: 94.8%

Vehicle Plate Detection Model:

- Base: YOLOv11l

- Output Classes: 1 (license plate)

- Training Images: 3,000 (Turkish plates)

- Training Epochs: 100

- mAP@0.5: 96.1%

General Object Detection Model:

- Model: YOLOv11l (pre-trained on COCO)

- Output Classes: 80 (using 5: person, car, motorcycle, bus, truck)

- mAP@0.5:0.95: 53.4% (COCO val2017, as published by Ultralytics)

10. Privacy & Compliance

10.1 GDPR Compliance Features

The system implements multiple privacy protection measures:

Personal Data Protection:

- Automatic face blurring (via full-person anonymization)

- License plate masking (vehicle identification prevention)

- No storage of biometric data

- Real-time processing (no personal data retention)

Data Minimization:

- Only processes video frames temporarily

- No database storage of detected persons or plates

- Detected persons and plates appear only in blurred form in the output video

Privacy by Design:

- Multiple detection models broaden privacy coverage

- Adjustable blur strength for regulatory compliance

- Modular architecture allows easy privacy feature updates

10.2 Regulatory Considerations

GDPR Article 25 (Data Protection by Design and by Default):

- Data protection by design (automatic anonymization)

- Data protection by default (all persons/plates blurred)

11. License

This project is open source and available under the Apache License 2.0.

12. References

- Ultralytics. YOLOv11 Documentation.

- European Union. (2016). General Data Protection Regulation (GDPR). Official Journal of the European Union.

- OpenCV. Image Filtering Documentation.

Acknowledgments

Special thanks to the Ultralytics team for developing and maintaining the YOLOv11 framework. This project benefits from the OpenCV community's excellent computer vision tools. The custom models were trained using publicly available traffic sign and vehicle plate datasets for educational and research purposes. Privacy protection features are designed with GDPR compliance principles in mind.

Note: Ensure you have proper permissions and comply with privacy regulations when using vehicle plate detection and person detection technology. This system is designed with GDPR compliance in mind and includes automated privacy protections. This project is intended for educational and research purposes in autonomous vehicle development and privacy-compliant surveillance systems. Always verify compliance with local data protection laws before deploying in production environments.